OpenAPI to gRPC with Quarkus


Introduction

REST (described with OpenAPI) and gRPC are two of the most popular styles for building APIs. REST is the style of choice for most public APIs, and gRPC is a popular alternative for internal APIs that need efficient network communication.

The OpenAPI Specification defines a standard to describe REST APIs and their capabilities.

By default, gRPC uses Protocol Buffers, Google’s open-source mechanism for serializing structured data. A Protocol Buffers schema also serves as an API specification.

TM Forum is a global association for organizations in the telecommunications industry. TM Forum has developed a set of OpenAPI specifications covering the telco domain.

Use Case

This article came about because I wanted to familiarize myself with gRPC and understand its benefits and constraints. Yet, with OpenAPI used so extensively nowadays thanks to the API-first approach, it did not feel right to invent a simple API off the top of my head.

Instead, what would happen if we implemented a mature OpenAPI specification with gRPC? TM Forum APIs are a perfect fit for this purpose, as they are complex but implementation-agnostic.

TL;DR

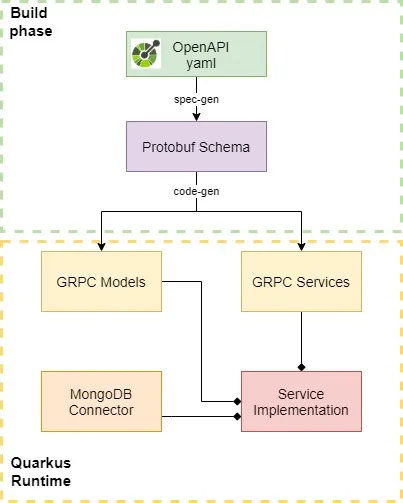

A POC of a gRPC service that uses only an OpenAPI specification as input, with everything else generated from it.

GitHub - Gorosc/openapi-to-grpc-quarkus

Design Choices

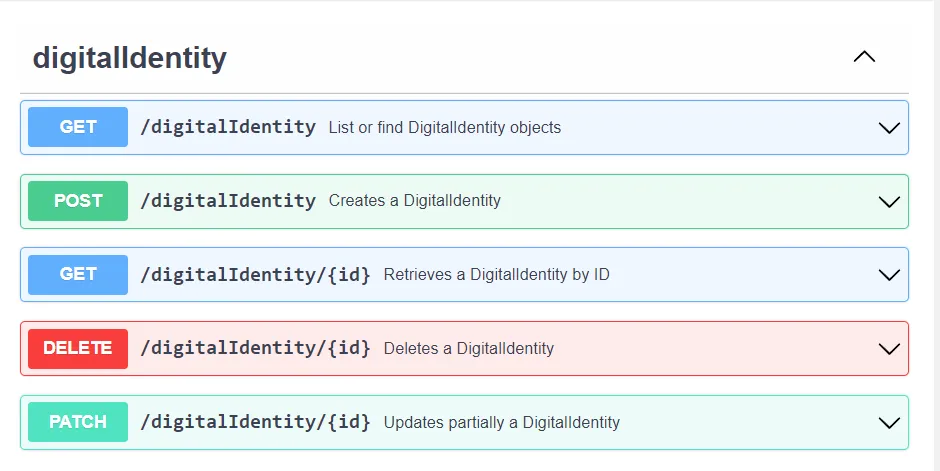

I chose the TMF 720 Digital Identity API, one of the latest additions to the TMF’s API Table. It provides the ability to manage a digital identity (credentials, passwords, biometrics, etc.). The business logic is not that important in the scope of this POC. It is a mature API, but not as bloated as older TMF APIs, and we will implement it as a CRUD API.

For the implementation, the tech choices are:

- Quarkus framework: We could implement the service using plain Java and the default server included in gRPC. However, I chose a framework because we have to manage configuration and database connections, and I did not feel like implementing those areas by hand. Quarkus has first-class support for gRPC.

- MongoDB: The API has a complex schema, and its separate entities don’t have much meaning on their own. We therefore want to save the whole model as a single document, and MongoDB is the first option that comes to mind.

- OpenAPI Generator: It is used to generate the Protobuf schema from the OpenAPI specification.

Solution

After evaluating gnostic, Google’s tool, I decided to use OpenAPI Generator. OpenAPI Generator a) comes as a Maven plugin and can be used in our build chain, and b) splits messages and services into different proto files. As a result, the gRPC generator produces files that are easier to work with in our IDE.

Due to lack of time, this POC is not production-ready. Validation and proper error handling are missing. But, following a test-driven development approach, all main flows are covered.

Implementation

Setup

Our Maven setup includes Quarkus and the necessary dependencies for gRPC and Mongo.

OpenAPI Generator comes into play as a plugin, and it is the first plugin in our build chain. The critical part of its configuration is the output folder /src/main/proto, as this is where Quarkus expects to find the proto schemas.

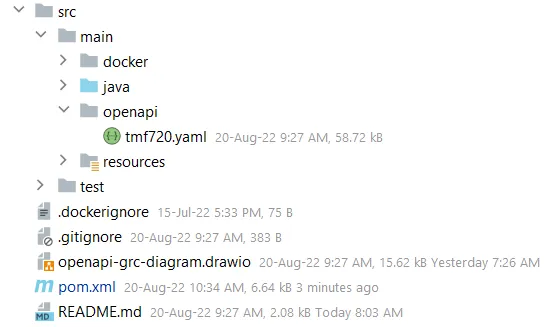

Our initial file structure will look like this:

Generation

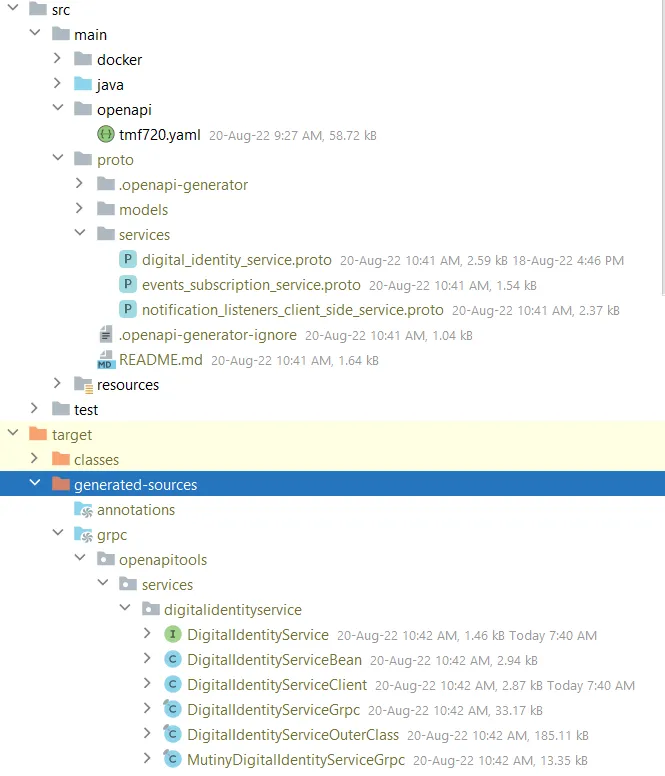

Let’s go ahead and invoke “mvn compile”. The plugins will execute sequentially, and the outcome will look like the following:

The OpenAPI generator plugin has populated our /src/main/proto folder with the generated protobuf schema for the services and the models. We will use the digital_identity_service.proto for our use case. It looks like this:

We are going to implement each of the methods of the gRPC service. Quarkus has generated an interface for the service that we can use, automatically making it reactive with Mutiny.

We could use the default gRPC Java implementation, DigitalIdentityServiceGrpc.DigitalIdentityServiceImplBase. However, since we are using Quarkus, it made sense to go reactive. That way, this POC is more usable as a reference implementation.

Create Digital Identity

Create is the best starting point, as it gives us some data to work with in the operations that follow.

The TMF specification uses different models for Create, Read and Update. As a result, the code generation produces multiple gRPC model classes that cannot be mapped to one another directly.

- DigitalIdentity

- DigitalIdentityCreate

- DigitalIdentityUpdate

Solution: We will use com.google.protobuf.util.JsonFormat to serialize the gRPC models to JSON. The models differ only in a few fields (id, status, createdAt, etc.), so parsing that JSON into a different model is an efficient way to map between them. It adds no real overhead either, as we need JSON serialization for Mongo anyway (see below).

Hypermedia is not defined in either protobuf or gRPC. I still found it interesting to populate the href attribute with the method and the input required to fetch the specific Digital Identity.

We don’t want to leak any gRPC logic into our DB domain and Mongo connector. Since we don’t have models for persistence, our Mongo service accepts JSON-formatted strings as input and returns them as output. Of course, this has the undesired effect of putting unvalidated and untyped input into our database. We are going to accept that risk in the scope of this POC.
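The boundary described above can be sketched in plain Java. This is a minimal illustration, not the project's code: the names IdentityStore and InMemoryIdentityStore are hypothetical, the in-memory map stands in for Mongo, and the methods are synchronous for brevity, whereas the actual service is reactive. The point is only that JSON strings, and no gRPC types, cross into the persistence layer.

```java
import java.util.Map;
import java.util.Optional;
import java.util.UUID;
import java.util.concurrent.ConcurrentHashMap;

// Hypothetical contract: names are illustrative, not taken from the repository.
interface IdentityStore {
    /** Stores a JSON document as-is and returns the generated identifier. */
    String create(String json);

    /** Returns the stored JSON document, or empty if the id is unknown. */
    Optional<String> retrieve(String id);
}

// In-memory stand-in for a Mongo-backed implementation (assumption for the sketch).
final class InMemoryIdentityStore implements IdentityStore {
    private final Map<String, String> documents = new ConcurrentHashMap<>();

    @Override
    public String create(String json) {
        // The JSON is not parsed or validated here, mirroring the risk accepted above.
        String id = UUID.randomUUID().toString();
        documents.put(id, json);
        return id;
    }

    @Override
    public Optional<String> retrieve(String id) {
        if (id == null) {
            return Optional.empty();
        }
        return Optional.ofNullable(documents.get(id));
    }
}
```

With a contract like this, the gRPC layer is the only place that knows about the generated model classes, and the store could be swapped without touching it.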

List Digital Identities

Similarly, we retrieve the results from Mongo and use JsonFormat to deserialize them into gRPC models. The database hands us a Multi, which we turn into a Uni by collecting all results into a List (Mutiny offers collect().asList() for this) and adding that List to the response.

In Mongo, we use the native _id ObjectId as the identifier. Since _id is not part of our specification (and the JSON deserialization would therefore fail), we replace it with id.
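The renaming of _id to id can be sketched with plain Java collections. The sketch assumes the stored document is available as a Map<String, Object> (the Mongo driver's Document type implements that interface); the class IdMapper and its method toApiDocument are hypothetical names, not taken from the repository.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical helper: not part of the original project.
final class IdMapper {

    private IdMapper() {}

    /**
     * Returns a copy of the stored document in which the Mongo-specific
     * "_id" key is replaced by the "id" key that the API schema defines.
     * The input map is left untouched.
     */
    static Map<String, Object> toApiDocument(Map<String, Object> stored) {
        Map<String, Object> api = new LinkedHashMap<>();
        Object rawId = stored.get("_id");
        if (rawId != null) {
            // An ObjectId prints as its hex string; a plain String stays as-is.
            api.put("id", rawId.toString());
        }
        for (Map.Entry<String, Object> entry : stored.entrySet()) {
            if (!"_id".equals(entry.getKey())) {
                api.put(entry.getKey(), entry.getValue());
            }
        }
        return api;
    }
}
```

The resulting map can then be serialized to JSON and parsed into the gRPC model, which only knows the id field.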

Retrieve Digital Identity

Retrieving and mapping a single digital identity works the same way as List.

As we search with _id, we don’t expect more than one result. The gRPC service does not need to know the detail that Mongo still returns a Multi in this case.

Delete Digital Identity

In gRPC, Empty is a message that we still need to create and send back.

The Mongo delete operation returns void. I challenged myself to make it return the document or a long (the number of hits) but settled on a simple void.

Patch Digital Identity

Patch is very similar to Create, except that we extract two arguments from the request and the Mongo service returns the document.

For the update to be a merge patch as the operation dictates, we need to use the $set operator.
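To make the effect of $set concrete, here is a minimal sketch using plain Java maps. It only illustrates the shape of the update document and how $set treats top-level fields; the class MergePatchSketch, its methods, and the sample field values are hypothetical, and applySet is an in-memory stand-in rather than a call to Mongo.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical helper: illustrates the shape of the update, not the project's code.
final class MergePatchSketch {

    private MergePatchSketch() {}

    /** Wraps the patched fields in a {"$set": {...}} update document. */
    static Map<String, Object> toSetUpdate(Map<String, Object> patchFields) {
        Map<String, Object> update = new LinkedHashMap<>();
        update.put("$set", new LinkedHashMap<>(patchFields));
        return update;
    }

    /**
     * In-memory stand-in for what $set does to top-level fields:
     * listed fields are overwritten or added, all other fields are kept.
     */
    @SuppressWarnings("unchecked")
    static Map<String, Object> applySet(Map<String, Object> stored, Map<String, Object> update) {
        Map<String, Object> result = new LinkedHashMap<>(stored);
        Object fields = update.get("$set");
        if (fields instanceof Map) {
            result.putAll((Map<String, Object>) fields);
        }
        return result;
    }

    public static void main(String[] args) {
        Map<String, Object> stored = new LinkedHashMap<>();
        stored.put("nickname", "alice");
        stored.put("status", "active");

        Map<String, Object> patch = new LinkedHashMap<>();
        patch.put("status", "suspended");

        Map<String, Object> update = toSetUpdate(patch);
        System.out.println(update);                   // the update document
        System.out.println(applySet(stored, update)); // nickname kept, status changed
    }
}
```

Without the $set wrapper, a replace-style update would drop every field that the patch does not mention. Note that a nested object listed under $set replaces the stored nested value as a whole; patching individual nested fields requires dot-notation paths.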

Wrap Up

We created the service and fulfilled our basic requirements. All service-related code is generated from the OpenAPI specification. We implemented the glue code by hand, though it could probably also be part of the generation.

I also came to some valuable insights:

- gRPC is more of a framework than a specification.

- The Protobuf schema carries less detail than the OpenAPI specification, and the generation left out some information, such as the business errors and codes.

- Protobuf Util JsonFormat can be used to create JSON documents for MongoDB storage.

- JsonFormat can also be used to map between similar objects.

- Since we have a more efficient message format for our service network layer, the JSON serialization needed for Mongo strikes me as odd.